Why the Next Wave of AI Is Really a Distributed-Systems Story

• When you strip away the hype, an AI agent is nothing more than an autonomous software process that receives a message, performs work, and produces another message.

• That is precisely the definition of a distributed-systems component.

Prologue

Your boss asks you to ship a chatbot to handle those simple customer-service queries.

It’s a single bot with a list of static text documents. All good: self-contained, stateless, easy to scale.

But then the pressure arrives to move the needle, and the requirements expand:

- Query a customer database to personalise answers.

- Translate replies for international users.

- Invoke ticketing and payment APIs to execute real tasks.

The bot becomes a jack-of-all-trades with no clear structure.

It juggles roles and context all at once, and you start to feel the strain in a few clear ways:

- Flows become harder to debug and maintain

- Prompts get longer and harder to manage

- It’s unclear which part of the bot is responsible for what

- Adding a new use case risks breaking what’s already working

That single-agent model starts to fall apart.

Hold on, maybe we need to rethink this.

We need to extract the responsibilities into multiple specialized agents.

Each agent focuses on a single task: planning, research, data fetching, user interaction, etc.

Each agent is easier to develop, debug, and test.

Okay, Let’s Define “Agent” Before the Committee Does

IBM says an agent is “a system that autonomously does tasks for a user or another system.”

You know what else fits that description? A cron job. But fine, we’ll roll with it.

An agent, in practice, is a long-running service that:

- Listens on some endpoint (HTTP, Kafka, a tin can on a string).

- Parses a message (sometimes structured, sometimes interpretive dance).

- Does work—maybe calls an API, maybe ponders the meaning of life via GPT-4o.

- Emits another message.
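Those four steps fit in a few lines of code. The sketch below is a hypothetical, in-process stand-in (a `queue.Queue` plays the endpoint, the `Agent` class and `shout` function are illustrative names, not any framework’s API), but the shape is the same one a real agent service has: block on an inbox, parse, do work, emit.

```python
import json
import queue
import threading
from typing import Callable, Optional


class Agent:
    """Hypothetical minimal agent: listen, parse, do work, emit."""

    def __init__(self, name: str, work: Callable[[dict], dict], outbox: "queue.Queue[str]") -> None:
        self.name = name
        self.work = work
        self.inbox: "queue.Queue[Optional[str]]" = queue.Queue()
        self.outbox = outbox

    def run(self) -> None:
        # Listen: block until a message arrives; None is our shutdown signal.
        while (raw := self.inbox.get()) is not None:
            request = json.loads(raw)                                    # parse
            result = self.work(request)                                  # do work (API call, LLM call, ...)
            self.outbox.put(json.dumps({"from": self.name, **result}))  # emit


def shout(msg: dict) -> dict:
    # Stand-in for real work; a real agent might call an LLM here.
    return {"reply": msg["text"].upper()}


outbox: "queue.Queue[str]" = queue.Queue()
agent = Agent("ShoutAgent", shout, outbox)
worker = threading.Thread(target=agent.run)
worker.start()
agent.inbox.put(json.dumps({"text": "hello"}))
agent.inbox.put(None)  # ask the agent to stop after draining its inbox
worker.join()
reply = outbox.get()
print(reply)  # {"from": "ShoutAgent", "reply": "HELLO"}
```

Swap the queue for an HTTP route or a Kafka consumer and the loop body does not change, which is the point: it is an ordinary service.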

Single Responsibility

OK, no need to reinvent the wheel here. Agents are just software components, and we have plenty of prior art to lean on.

We break up the monolithic bot into smaller, focused agents.

Each agent has a single responsibility and a single reason to change; it is pluggable, easier to maintain, etc., etc.

We connect them all up and, hey presto, problem solved.
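The decomposition can be sketched as a dispatch table. Each hypothetical agent (`translate_agent`, `ticket_agent`) owns exactly one job, and `dispatch` routes a message by its `type` field. The names and message shape are illustrative assumptions, not a framework API.

```python
from typing import Callable, Dict


def translate_agent(msg: dict) -> dict:
    # Hypothetical TranslateAgent; a real one would call a translation model or API.
    return {"text": f"[{msg['lang']}] {msg['text']}"}


def ticket_agent(msg: dict) -> dict:
    # Hypothetical TicketAgent; a real one would call the ticketing API.
    return {"ticket": "opened", "summary": msg["text"][:40]}


# One entry per responsibility. A new use case is a new entry, not an edit to existing agents.
ROUTES: Dict[str, Callable[[dict], dict]] = {
    "translate": translate_agent,
    "ticket": ticket_agent,
}


def dispatch(msg: dict) -> dict:
    """Send a message to the single agent responsible for its type."""
    handler = ROUTES.get(msg["type"])
    if handler is None:
        raise ValueError(f"no agent registered for type {msg['type']!r}")
    return handler(msg)


print(dispatch({"type": "translate", "lang": "fr", "text": "hello"}))  # {'text': '[fr] hello'}
```

Adding a use case means adding one entry to `ROUTES`, which is the “don’t break what’s already working” property we were after. It also sets up the next problem: once agents can call `dispatch` themselves, they can call each other.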

Lots of Bots, Lots of Problems

So you get a PlannerAgent, FlightAgent, HotelAgent, SnarkAgent - all yakking at each other until they deadlock.

The objective is to allow agents to invoke other agents.

OK, makes sense, but anyone who has worked on distributed systems knows this is where the fun begins.

What if Agent A calls/waits on Agent B, and Agent B calls/waits on Agent C, and Agent C calls/waits on Agent A?

We have a circular dependency. Deadlock.
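One cheap defence is to carry the call chain with every request and refuse any hop that would revisit an agent already waiting upstream. The sketch assumes a hypothetical in-process `call` helper, an `AGENTS` registry and a `CycleError`; none of these are a real framework’s API.

```python
from typing import Callable, Dict, Tuple


class CycleError(RuntimeError):
    pass


# Hypothetical registry: each agent receives the payload plus the chain of callers.
AGENTS: Dict[str, Callable[[str, Tuple[str, ...]], str]] = {}


def call(target: str, payload: str, chain: Tuple[str, ...] = ()) -> str:
    # The chain travels with the request, so every hop knows who is already waiting.
    if target in chain:
        raise CycleError(" -> ".join(chain + (target,)))
    return AGENTS[target](payload, chain + (target,))


AGENTS["A"] = lambda p, chain: call("B", p, chain)
AGENTS["B"] = lambda p, chain: call("C", p, chain)
AGENTS["C"] = lambda p, chain: call("A", p, chain)  # closes the loop

try:
    call("A", "book a trip")
except CycleError as err:
    print(f"cycle detected: {err}")  # cycle detected: A -> B -> C -> A
```

This only catches cycles inside a single request’s call tree. Calls that merely hang still need timeouts.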

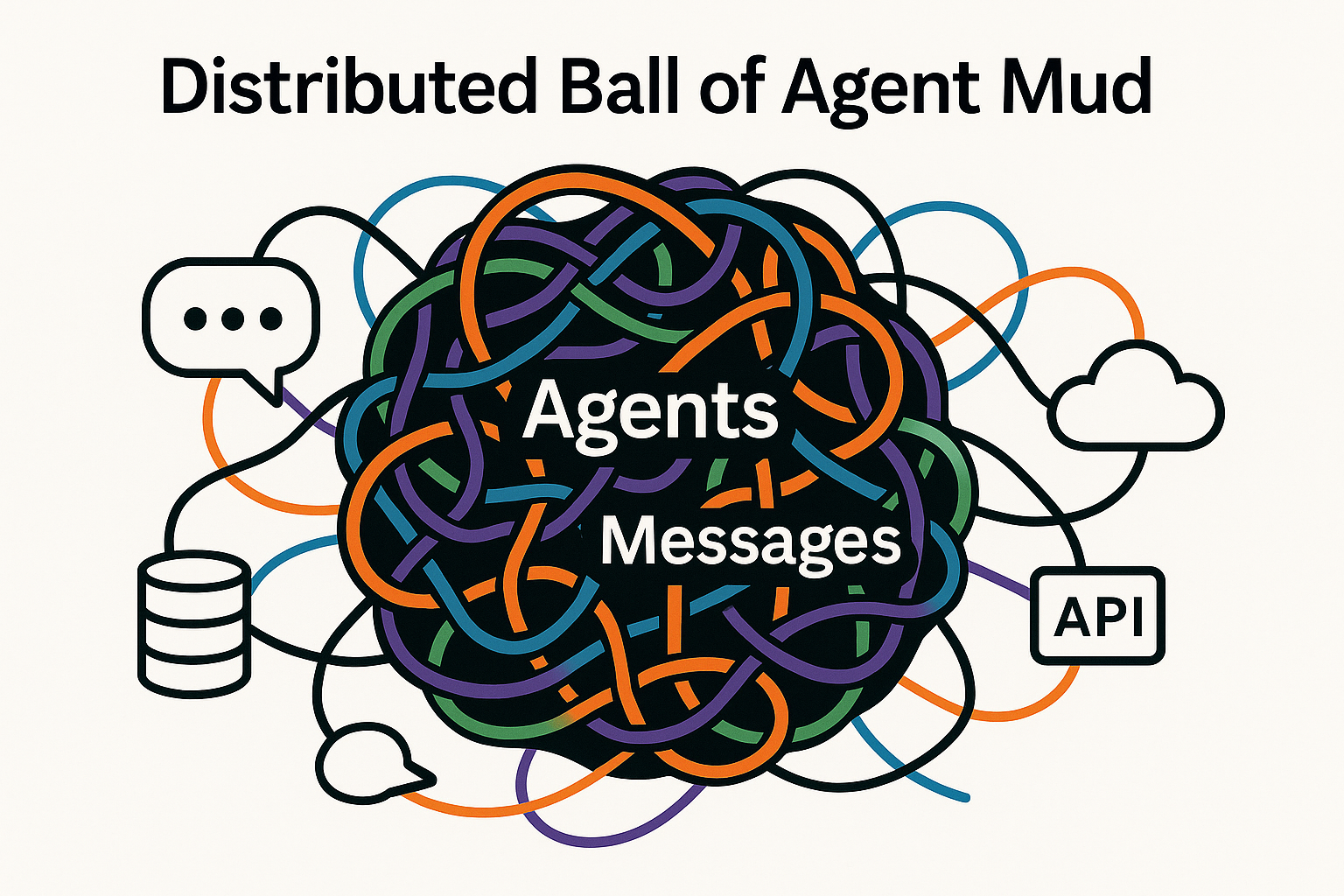

Distributed Ball Of Agent Mud

Now we have agents calling APIs, and agents calling agents calling agents, etc., etc.

But how do we coordinate all these agents?

When should they stop?

If Agent A calls Agent B, Agent B calls Agent C, and Agent C calls Agent A… whoops?
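One pragmatic answer to “when should they stop?” is an explicit budget that every hop spends. The sketch assumes a hypothetical `Envelope` that carries a hop limit and an LLM token budget alongside the payload; the default numbers are illustrative, not recommendations.

```python
from dataclasses import dataclass, replace


class BudgetExceeded(RuntimeError):
    pass


@dataclass(frozen=True)
class Envelope:
    payload: str
    hops_left: int = 5          # hypothetical default: at most 5 agent-to-agent hops
    tokens_left: int = 10_000   # hypothetical per-request LLM token budget


def forward(env: Envelope, tokens_used: int) -> Envelope:
    """Charge this hop against the budget before passing the message on."""
    if env.hops_left <= 0:
        raise BudgetExceeded("hop limit reached")
    if tokens_used > env.tokens_left:
        raise BudgetExceeded("token budget exhausted")
    return replace(env, hops_left=env.hops_left - 1, tokens_left=env.tokens_left - tokens_used)


env = Envelope("plan a trip", hops_left=2)
env = forward(env, tokens_used=1_200)  # hop 1
env = forward(env, tokens_used=800)    # hop 2
print(env)  # Envelope(payload='plan a trip', hops_left=0, tokens_left=8000)
```

Unlike the cycle check, a budget also stops long non-circular chains and agents that keep re-asking each other with slightly different payloads.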


The Distributed-Systems Smorgasbord

The moment you have more than one agent, you have a distributed system with all the usual suspects:

- Latency - “Why is OrderAgent waiting 900 ms for TranslateAgent?”

- Schema - “Oops, BillingAgent shipped a new JSON field without telling anyone.”

- Partial-Failure - “ClassifierAgent is 502ing; do we retry, compensate, or pray?”

- Observability - “Trace-ID? Never heard of her.”
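For the schema problem, the classic fix is a tolerant reader: check the major version, take only the fields you need, and ignore the rest. The message shape below (`schema_version`, `invoice_id`, `amount_cents`, `currency`) is a hypothetical BillingAgent contract, not a real one.

```python
import json
from dataclasses import dataclass


@dataclass
class Invoice:
    invoice_id: str
    amount_cents: int
    currency: str = "EUR"  # hypothetical default for producers that omit it


SUPPORTED_MAJOR = 1


def read_invoice(raw: str) -> Invoice:
    """Tolerant reader: reject incompatible majors, ignore unknown fields."""
    doc = json.loads(raw)
    major = int(str(doc.get("schema_version", "1.0")).split(".")[0])
    if major != SUPPORTED_MAJOR:
        raise ValueError(f"unsupported schema major version {major}")
    return Invoice(
        invoice_id=doc["invoice_id"],
        amount_cents=int(doc["amount_cents"]),
        currency=doc.get("currency", "EUR"),
    )


# BillingAgent added "discount_code" in 1.1 without telling anyone; we don't care.
msg = '{"schema_version": "1.1", "invoice_id": "INV-7", "amount_cents": 4200, "discount_code": "NEW"}'
print(read_invoice(msg))  # Invoice(invoice_id='INV-7', amount_cents=4200, currency='EUR')
```

Additive changes go through silently; a breaking change must bump the major version, and then it fails loudly at the boundary instead of deep inside some other agent.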

Spoiler: AI magic dust does not exorcise these demons.

You still need process managers, routing slips, idempotent receivers, dead-letter queues, and logs that someone actually reads.
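Two of those patterns fit in one small sketch: an idempotent receiver that processes each message id once, and a dead-letter queue for messages that keep failing. The class and field names are hypothetical; in production the `processed` set would live in a durable store, and recording it should be atomic with the side effect.

```python
from typing import Callable, List, Set, Tuple


class IdempotentReceiver:
    """Hypothetical sketch: handle each message id once; park repeated failures in a DLQ."""

    def __init__(self, handler: Callable[[dict], None], max_attempts: int = 3) -> None:
        self.handler = handler
        self.max_attempts = max_attempts
        self.processed: Set[str] = set()               # in production: a durable store
        self.dead_letters: List[Tuple[dict, str]] = []

    def receive(self, msg: dict) -> None:
        if msg["id"] in self.processed:
            return  # duplicate delivery (e.g. at-least-once redelivery): ignore it
        last_error = ""
        for _ in range(self.max_attempts):
            try:
                self.handler(msg)
                self.processed.add(msg["id"])
                return
            except Exception as exc:  # broad on purpose: any failure counts as an attempt
                last_error = repr(exc)
        self.dead_letters.append((msg, last_error))  # give up; keep it for someone to read


refunds: List[int] = []


def refund(msg: dict) -> None:
    if msg["amount"] < 0:
        raise ValueError("negative refund")
    refunds.append(msg["amount"])


rx = IdempotentReceiver(refund)
rx.receive({"id": "m1", "amount": 50})
rx.receive({"id": "m1", "amount": 50})  # redelivered: not refunded twice
rx.receive({"id": "m2", "amount": -5})  # fails every attempt and lands in the DLQ
print(refunds, len(rx.dead_letters))  # [50] 1
```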

Process Manager
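A process manager answers the coordination questions above: one component holds the state of each request and decides which agent runs next, so agents reply to the manager instead of calling each other, and the A → B → C → A loop cannot form. Below is a minimal in-memory sketch using the FlightAgent/HotelAgent flow; the class and method names are hypothetical, and a real version would persist `state` so it survives restarts.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class TripState:
    steps_done: List[str] = field(default_factory=list)
    status: str = "running"


class TripProcessManager:
    """Hypothetical process manager: the only place that knows the flow."""

    FLOW = ["FlightAgent", "HotelAgent"]

    def __init__(self) -> None:
        self.state: Dict[str, TripState] = {}

    def start(self, trip_id: str) -> str:
        self.state[trip_id] = TripState()
        return self.FLOW[0]

    def on_reply(self, trip_id: str, agent: str, ok: bool) -> Optional[str]:
        """Record an agent's reply; return the next agent to call, or None when finished."""
        s = self.state[trip_id]
        if not ok:
            s.status = "failed"  # a real manager would trigger compensation here
            return None
        s.steps_done.append(agent)
        if len(s.steps_done) == len(self.FLOW):
            s.status = "done"
            return None
        return self.FLOW[len(s.steps_done)]


pm = TripProcessManager()
nxt = pm.start("trip-1")                   # "FlightAgent"
nxt = pm.on_reply("trip-1", nxt, ok=True)  # "HotelAgent"
nxt = pm.on_reply("trip-1", nxt, ok=True)  # None
print(pm.state["trip-1"].status)           # done
```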

Distributed Systems Platforms

OK, so we see the potential for agents. There’s hype for sure, but this is a race we need to be in.

There are unknowns, sure, but let’s deal with the problem at hand: distributed systems.

Why focus on Dapr (the Distributed Application Runtime) specifically? Because it’s one of the few platforms that provides all the building blocks we need for agent systems out of the box, without vendor lock-in. It’s open source, cloud-agnostic, and already battle-tested in production distributed systems.

It has building blocks we can grab to handle some of the challenges we’ve identified:

- PubSub — event-driven communication between agents

- State Stores — durable state for long-running workflows

- Actors — lightweight, isolated execution units

- Workflows — orchestration with retries, timeouts, compensation

- Secrets — secure credential management

- Service Invocation — reliable RPC with retries and circuit breakers
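To make the State Stores item concrete, here is a minimal sketch against Dapr’s state HTTP API (`POST /v1.0/state/<store>` with a list of key/value pairs, `GET /v1.0/state/<store>/<key>`), using only the standard library. It assumes a sidecar on localhost, the `DAPR_HTTP_PORT` environment variable (falling back to 3500), and a component named `statestore`, which is what `dapr init` creates locally. The helper names are hypothetical.

```python
import json
import os
import urllib.request

# Sidecar base URL; Dapr sets DAPR_HTTP_PORT for the app, 3500 is the usual default.
DAPR_URL = f"http://localhost:{os.environ.get('DAPR_HTTP_PORT', '3500')}/v1.0"


def save_state_request(store: str, key: str, value: dict) -> urllib.request.Request:
    """Build a save: POST /v1.0/state/<store> with a JSON list of {key, value} items."""
    body = json.dumps([{"key": key, "value": value}]).encode()
    return urllib.request.Request(
        f"{DAPR_URL}/state/{store}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def get_state_request(store: str, key: str) -> urllib.request.Request:
    """Build a read: GET /v1.0/state/<store>/<key>."""
    return urllib.request.Request(f"{DAPR_URL}/state/{store}/{key}", method="GET")


def send(req: urllib.request.Request) -> bytes:
    # Only works with a Dapr sidecar running next to this process.
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.read()
```

With a sidecar running, `send(save_state_request("statestore", "trip-1", {"status": "booking"}))` persists the workflow’s progress, and whether that lands in Redis, Postgres or Cosmos DB is decided by the component definition, not by this code.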

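It inverts the responsibility for interfacing with infrastructure. Dapr runs as a sidecar next to your service: your code talks to the Dapr API over HTTP or gRPC, and a component definition decides which piece of infrastructure sits behind it. For example, you don’t need to interface directly with Kafka; Dapr proxies it while maintaining the pub/sub semantics.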
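Here is what that inversion looks like from the agent’s side: a publish is a plain HTTP call to the sidecar’s documented endpoint, `POST /v1.0/publish/<pubsub>/<topic>`, and nothing in the code mentions Kafka. The sketch assumes a sidecar on localhost (port from `DAPR_HTTP_PORT`, else 3500) and a pub/sub component named `pubsub`; the helper names and the `orders` topic are hypothetical.

```python
import json
import os
import urllib.request

DAPR_URL = f"http://localhost:{os.environ.get('DAPR_HTTP_PORT', '3500')}/v1.0"


def publish_request(pubsub: str, topic: str, event: dict) -> urllib.request.Request:
    """Build a publish call to the sidecar: POST /v1.0/publish/<pubsub>/<topic>.

    The broker (Redis, Kafka, ...) is chosen by the component named `pubsub`, not here.
    """
    return urllib.request.Request(
        f"{DAPR_URL}/publish/{pubsub}/{topic}",
        data=json.dumps(event).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def publish(pubsub: str, topic: str, event: dict) -> int:
    # Requires a running sidecar; Dapr answers 204 No Content when the message is accepted.
    with urllib.request.urlopen(publish_request(pubsub, topic, event), timeout=5) as resp:
        return resp.status
```

Moving from Redis to Kafka means changing the component’s `type` (for Kafka, `pubsub.kafka`) and its metadata in YAML; `publish("pubsub", "orders", {...})` stays exactly as it is.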

This architectural principle is incredibly powerful: your agent code stays clean while the platform handles the distributed systems complexity.

And now the Dapr team has stepped into the agent world.

Dapr Agents: Production Plumbing So You Can Sleep at Night

The Dapr folks looked at all the Python scripts masquerading as Agent Frameworks and said, “Hold my YAML.”

They bolted agents onto the Dapr building blocks.

Highlights:

- Tiny actors: millions per node. Good luck doing that with heavyweight containers.

- Workflow API: retries, timeouts, compensation—because an LLM will hallucinate an invoice at 3 a.m., guaranteed.

- Cloud-neutral: runs on Kubernetes, your laptop, or a potato—doesn’t care.

- A2A adapter: speaks the new lingua franca, the Agent2Agent (A2A) protocol, out of the box.
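What “retries, timeouts, compensation” buys you can be sketched as a plain-Python saga. This is deliberately not the Dapr Workflow API (whose calls we don’t reproduce here): steps run in order with exponential-backoff retries, and if one still fails, the completed steps are undone in reverse order. The step names are hypothetical, and timeouts plus durable persistence of progress, which a workflow engine adds, are left out.

```python
import time
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], None], Callable[[], None]]  # (name, action, compensation)


def with_retries(action: Callable[[], None], attempts: int = 3, backoff_s: float = 0.05) -> None:
    """Retry with exponential backoff; re-raise after the last attempt."""
    for attempt in range(attempts):
        try:
            return action()
        except Exception:
            if attempt == attempts - 1:
                raise
            time.sleep(backoff_s * 2 ** attempt)


def run_saga(steps: List[Step]) -> List[str]:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    done: List[Step] = []
    log: List[str] = []
    for step in steps:
        name, action, _ = step
        try:
            with_retries(action)
            done.append(step)
            log.append(f"did {name}")
        except Exception:
            log.append(f"failed {name}")
            for cname, _, compensate in reversed(done):
                compensate()
                log.append(f"undid {cname}")
            break
    return log


def fail() -> None:
    raise RuntimeError("hallucinated invoice")


log = run_saga([
    ("book flight", lambda: None, lambda: None),
    ("book hotel", lambda: None, lambda: None),
    ("charge card", fail, lambda: None),
])
print(log)
# ['did book flight', 'did book hotel', 'failed charge card', 'undid book hotel', 'undid book flight']
```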

It’s basically Spring Boot for agents, minus the XML and the existential dread.

Architectural Takeaways (Tattoo These Somewhere)

- Agents = services. Give them versioned interfaces, proper monitoring, and a budget.

- Use proven patterns. Idempotent receivers, circuit breakers, sagas — you already know this stuff. See Enterprise Integration Patterns for the canonical reference.

- Expect hallucinations. Add validator agents or human review before an AI refunds $1 million by accident.

- Trace everything. If you can’t answer “Where did this request go?” you’re already in trouble. OpenTelemetry is your friend.

- Standardise early. Adopt A2A (or whatever wins) so you’re not building Babel 2.0.
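The “expect hallucinations” rule can start as something very boring: deterministic checks between the model’s proposal and the side effect. The sketch below is a hypothetical validator for LLM-proposed refunds; `RefundProposal`, the three outcomes, and the 100.00 auto-approve limit are illustrative assumptions, not a recommended policy.

```python
from dataclasses import dataclass


@dataclass
class RefundProposal:
    order_id: str
    amount_cents: int
    order_total_cents: int


AUTO_APPROVE_LIMIT_CENTS = 10_000  # hypothetical policy: auto-approve up to 100.00


def validate(p: RefundProposal) -> str:
    """Return 'reject', 'human_review' or 'approve' for an LLM-proposed refund."""
    if p.amount_cents <= 0 or p.amount_cents > p.order_total_cents:
        return "reject"        # can never be right, whatever the model says
    if p.amount_cents > AUTO_APPROVE_LIMIT_CENTS:
        return "human_review"  # plausible but expensive: a person signs off
    return "approve"


print(validate(RefundProposal("o-1", 2_500, 5_000)))        # approve
print(validate(RefundProposal("o-2", 50_000, 80_000)))      # human_review
print(validate(RefundProposal("o-3", 100_000_000, 5_000)))  # reject
```

A validator agent can be exactly this: a small service that sits on the path to the payment API and never calls an LLM itself.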

Epilogue: Huge Potential, Don’t Forget What We Already Know

We’ve come full circle.

Agents have vast potential; we’ll have to see how it plays out.

But the reality is that they absolutely are distributed systems.

If you ignore that, you’ll end up with a colourful, sentient, very expensive Ball of Agent Mud.

But if you treat agents like micro-services—with contracts, retries, metrics, and yes, budget constraints—you’ll be fine.

And hey, maybe this time we’ll keep the lessons longer than a decade.

Now go write some code. But please, for the love of uptime, add a trace-id.
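If you want the trace-id today, the W3C Trace Context `traceparent` header is the standard shape: `version-traceid-parentid-flags`, with a 32-hex-digit trace-id that stays fixed for the whole request and a 16-hex-digit parent-id that changes on every hop. A minimal sketch of minting and propagating it follows; the function names are hypothetical, and in production you’d let OpenTelemetry’s propagators do this for you.

```python
import re
import secrets

TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent() -> str:
    """Start a trace: version 00, random trace-id and parent-id, flags 01 (sampled)."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def child_traceparent(parent: str) -> str:
    """Keep the trace-id, mint a new parent-id for the next agent-to-agent hop."""
    m = TRACEPARENT.match(parent)
    if m is None:
        return new_traceparent()  # malformed header: start a fresh trace rather than crash
    trace_id, _, flags = m.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


incoming = new_traceparent()            # at the edge, when the user's request arrives
outgoing = child_traceparent(incoming)  # header for the PlannerAgent -> FlightAgent call
print(incoming.split("-")[1] == outgoing.split("-")[1])  # True
```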

In This Series

This post is the first in a series exploring how distributed systems principles apply to AI agents:

- Agents Are Still Just Software (this post) — the foundational “why”

- Agents, Routing, Patterns, and Actors — message routing patterns and the actor model

- From Process Managers to Stable Agent Workflows — stability patterns for production systems

- Database Transactions in LangGraph Workflows — keeping state consistent across systems